- 🌍 EU member states approve the world’s first major AI regulation.

- 📜 The AI Act sets comprehensive rules for AI technologies.

- 💶 Companies breaching the AI Act may face fines of up to 35 million euros or 7% of annual global revenue, whichever is higher.

- 🚫 The law bans AI uses deemed to pose an unacceptable risk, such as “social scoring” and certain forms of predictive policing.

- 🚗 High-risk systems include autonomous vehicles and medical devices.

- 🇺🇸 US tech firms developing AI are closely monitoring this regulation.

- 🕒 Generative AI systems will have a transition period of up to 36 months to comply.

- 🛡 The law mandates transparency, routine testing, and cybersecurity for general-purpose AI.

- ⚖️ Effective implementation and enforcement of the AI Act are top priorities now.

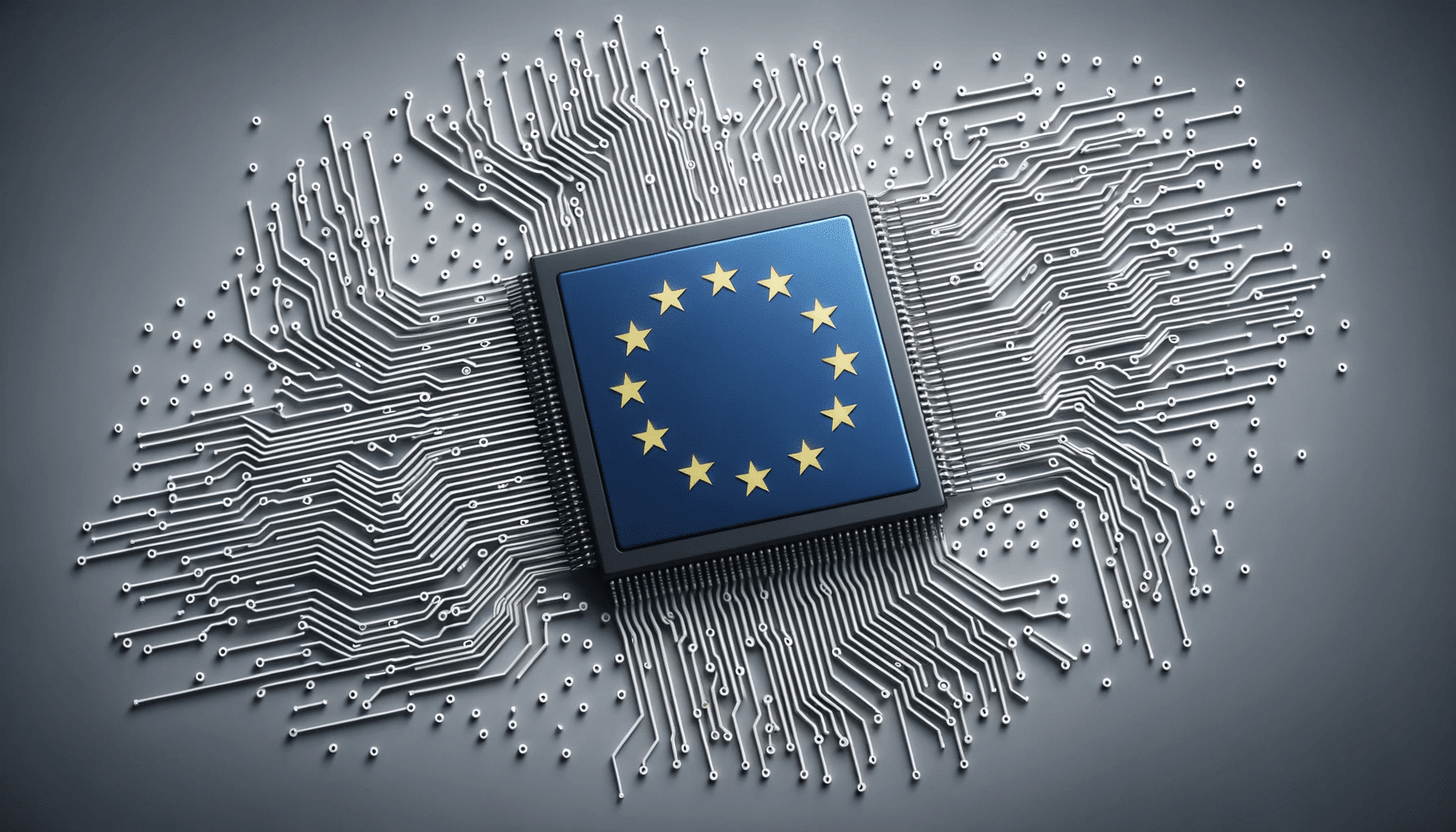

Artificial intelligence is one of the most transformative technologies of our time, revolutionizing industries and optimizing processes across various sectors. However, the use of AI also comes with significant ethical and societal risks. Recognizing this, the European Union (EU) has taken a monumental step by formally approving the AI Act, the world’s first major regulation designed to govern AI technologies. Here’s a comprehensive look at what the Act entails and its implications on a global scale.

The Necessity for AI Regulation

In recent years, AI technologies have advanced at an unprecedented rate. From autonomous vehicles to predictive policing, AI’s capabilities are rapidly expanding. However, these innovations bring about critical concerns related to ethics, privacy, and security. The AI Act aims to address these issues by setting a legal framework for the development, deployment, and utilization of AI within the EU.

Key Elements of the AI Act

Comprehensive Rules for AI Technologies

The AI Act establishes binding rules to ensure that AI technologies are developed responsibly. For general-purpose AI, these include:

- Transparency: Companies must disclose how their AI models are trained.

- Routine Testing: Regular assessments to ensure compliance and performance.

- Cybersecurity: Adequate protections against potential cyber threats.

Penalties for Non-Compliance

Companies that violate the AI Act face severe penalties. For the most serious breaches, fines can reach:

- 35 million euros, or

- 7% of their annual global revenues, whichever is higher.

These stringent penalties underscore the importance of adhering to the new regulations.
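The "whichever is higher" rule is simple arithmetic, sketched below in Python for illustration only. The function name and the revenue figures are hypothetical, and the sketch models just the headline tier described above; the Act also sets lower fine tiers for other kinds of breaches, which are not modeled here.

```python
def max_fine_eur(annual_global_revenue_eur: int) -> int:
    """Return the maximum fine (in euros) under the headline penalty tier.

    Illustrative sketch: the higher of a fixed EUR 35 million or 7% of
    annual global revenue. Lower tiers for other breaches are not modeled.
    """
    fixed_cap_eur = 35_000_000
    # Integer arithmetic avoids floating-point rounding; rounds down to whole euros.
    revenue_based_cap_eur = annual_global_revenue_eur * 7 // 100
    return max(fixed_cap_eur, revenue_based_cap_eur)


# Hypothetical revenue figures, for illustration only.
for revenue in (100_000_000, 1_000_000_000, 50_000_000_000):
    print(f"revenue EUR {revenue:,}: max fine EUR {max_fine_eur(revenue):,}")
```

The two caps meet at 500 million euros of revenue (35 million / 0.07). Below that, the fixed 35 million euro amount is the binding ceiling; above it, the 7% share grows with the company's size, which is why the largest firms face the largest potential fines.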

High-Risk AI Applications

The AI Act adopts a risk-based approach, categorizing AI applications by the level of risk they pose to society. Uses that pose an unacceptable risk are banned outright, while high-risk systems are subject to strict requirements:

Banned Applications

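- Social Scoring: Systems that rate people based on their social behavior or personal characteristics, which can lead to unfair treatment and infringe on privacy.

- Predictive Policing: AI systems that predict whether an individual will commit a crime based solely on profiling or personality traits, which could lead to discriminatory practices.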

High-Risk Systems

- Autonomous Vehicles: AI used in self-driving cars must undergo rigorous testing to ensure safety.

- Medical Devices: AI applications in healthcare must comply with strict standards to protect patient safety and privacy.
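The risk-based approach can be pictured as a lookup from a use case to a risk tier, where the tier determines whether the use is allowed at all. The Python sketch below is a deliberately simplified illustration, not a legal classification tool: the Act treats the banned uses as an "unacceptable risk" tier separate from high-risk systems, but the use-case keys, the `OTHER` fallback, and the function names are hypothetical, and the Act's actual criteria are far more detailed.

```python
from enum import Enum


class RiskTier(Enum):
    """Simplified tiers; the Act's real categories carry more detail."""
    UNACCEPTABLE = "prohibited"
    HIGH = "allowed subject to strict requirements"
    OTHER = "lighter or no specific obligations (simplification)"


# Hypothetical mapping of the example uses named in this article.
EXAMPLE_USES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "predictive_policing_by_profiling": RiskTier.UNACCEPTABLE,
    "autonomous_vehicle": RiskTier.HIGH,
    "medical_device": RiskTier.HIGH,
}


def classify(use_case: str) -> RiskTier:
    """Look up a use case; anything unlisted falls back to OTHER."""
    return EXAMPLE_USES.get(use_case, RiskTier.OTHER)


def is_permitted(use_case: str) -> bool:
    """Only the unacceptable-risk tier is banned outright."""
    return classify(use_case) is not RiskTier.UNACCEPTABLE


for use in ("social_scoring", "medical_device", "spam_filter"):
    tier = classify(use)
    print(f"{use}: {tier.name} ({tier.value}), permitted={is_permitted(use)}")
```

The key design point the sketch captures is that high-risk systems remain permitted but carry obligations, whereas unacceptable-risk uses cannot be made compliant at all.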

Impact on Global Tech Firms

With the AI Act in place, U.S. tech giants and other global players developing AI must pay close attention. Because the Act applies to any company offering AI systems in the EU, wherever it is based, firms like Google, Microsoft, and OpenAI will need to ensure their products comply. The Act is likely to have significant implications for how AI technologies are developed, deployed, and marketed in the EU.

Generative AI Systems: Transition and Compliance

Generative AI models fall under the Act’s rules for “general-purpose” AI, which are phased in over a transition period:

- 12 Months: The general-purpose AI rules apply to new models 12 months after the AI Act enters into force.

- 36 Months: Models already on the market before that point have up to 36 months from entry into force to become fully compliant.
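Turning these transition periods into calendar deadlines is date arithmetic. The Python sketch below assumes both periods are counted in whole months from the date the Act enters into force; the date passed in the usage example is hypothetical, and the Act's own final provisions fix the exact application dates, so treat the output as an approximation.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Shift a date forward by whole months, clamping to the month's last day."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


# Assumed transition periods, in months after entry into force.
TRANSITION_MONTHS = {
    "new general-purpose AI models": 12,
    "general-purpose AI models already on the market": 36,
}


def compliance_deadlines(entry_into_force: date) -> dict[str, date]:
    """Map each category to its approximate compliance deadline."""
    return {
        label: add_months(entry_into_force, months)
        for label, months in TRANSITION_MONTHS.items()
    }


# Hypothetical entry-into-force date, for illustration only.
for label, deadline in compliance_deadlines(date(2024, 1, 1)).items():
    print(f"{label}: {deadline.isoformat()}")
```

Clamping the day matters for start dates late in a month: adding one month to January 31 lands on the last day of February rather than raising an error.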

Focus on Ethical AI Development

Belgium’s Secretary of State for Digitization, Mathieu Michel, emphasized the importance of trust, transparency, and accountability in AI development. The new regulation reflects the EU’s aim of building trust in AI while still encouraging innovation.

Implementation and Enforcement

The real challenge now lies in implementing and enforcing the AI Act effectively. Regulators, including national authorities and the European Commission’s AI Office, will need to work with AI developers to ensure the rules are upheld. This collaboration will be vital for safeguarding the ethical use of AI while promoting technological advancement.

Conclusion: A New Landscape for AI

The EU AI Act is a pioneering regulatory framework that sets a new global standard for AI governance. By addressing the ethical and societal risks associated with AI, the Act aims to create a safer and more transparent environment for AI development. As the world watches closely, this landmark regulation could serve as a blueprint for other nations grappling with the rapid rise of artificial intelligence.